While the tech industry is heavily invested in AI and the as-yet-unimaginable opportunities it will deliver, recent years have produced a number of troubling examples of AI either going wrong or being applied in problematic ways. They range from the ridiculous (soap dispensers that don’t work properly for customers with dark skin) to the disturbing (algorithms that predict whether offenders will go back to prison). What unites these scenarios is that the AI has been coded, arguably inadvertently, with human bias.

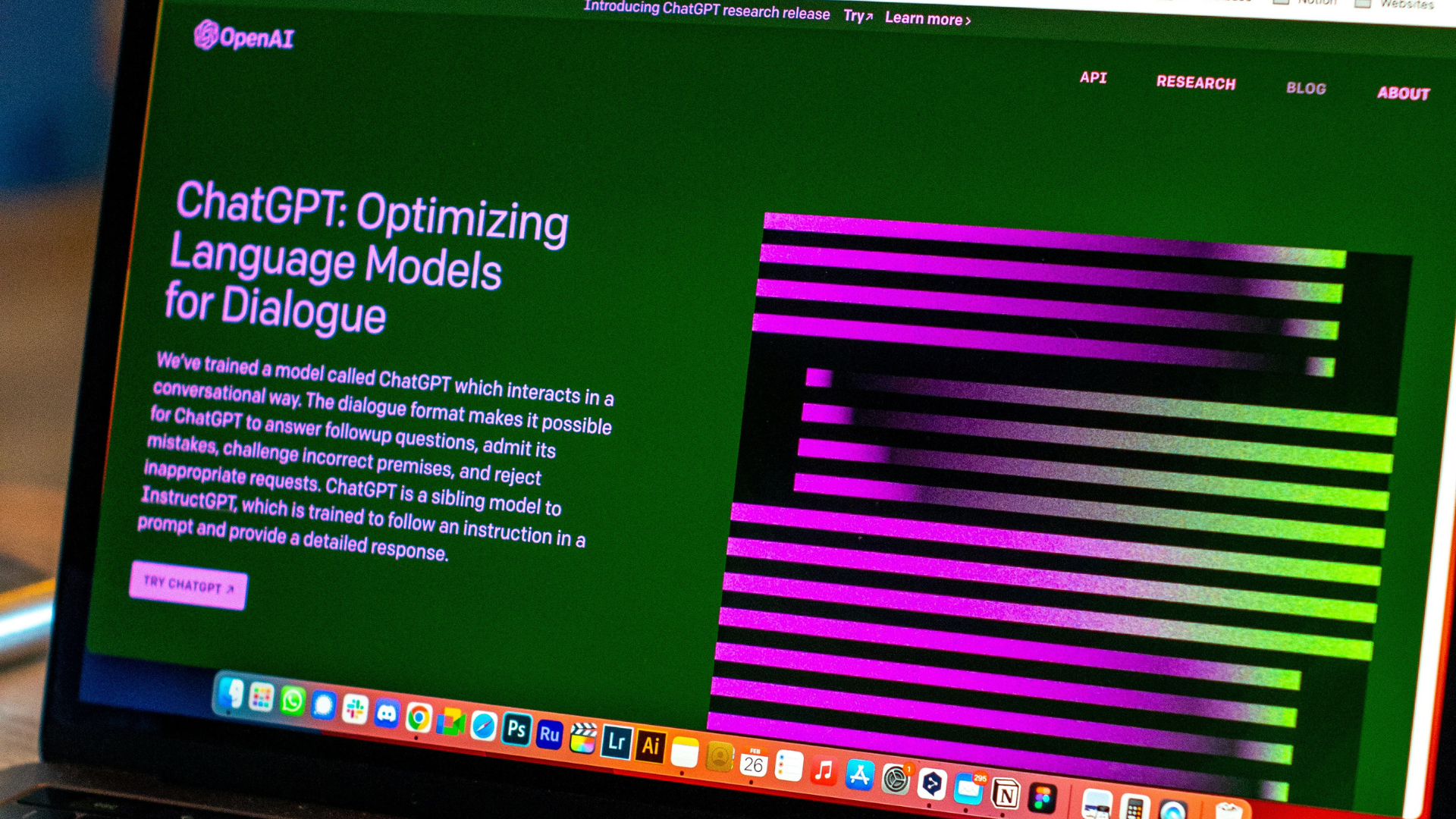

In an interesting example from last year, insurance firm Lemonade had to walk back claims made in a since-deleted Twitter thread that it uses AI to scan customers’ faces for hints of fraud during its video claims process. The idea of being judged by AI on something as significant as an insurance claim was shocking both to its customers and to anyone who saw the thread – particularly as we increasingly understand that the way AI algorithms arrive at decisions might be quick but isn’t always fair. AI has been known to discriminate on the basis of race, gender, economic background, and disability, which in turn leads to many people being denied housing, jobs, education, or justice. When an insurance company that sells itself on essentially replacing human brokers and actuaries with bots and AI also collects data about customers without their realizing they are giving it away, it only adds to the feeling that the game is rigged.

Over the course of several tweets, Lemonade stated that it collects more than 1,600 “data points” about its users, which it described as “100X more data than traditional insurance carriers.” The tweets didn’t clarify what those data points were or explain how and when they’re collected, but claimed that they produce “nuanced profiles” and “remarkably predictive insights” which enable Lemonade to determine, in granular detail, its customers’ “level of risk.” On this basis, the company was then in a position to improve its loss ratio, i.e., to collect more in premiums than it had to pay out in claims.

Following an outcry, Lemonade published a blog post retracting its earlier statements about AI, declaring that it “does not use, and we’re not trying to build, AI that uses physical or personal features to deny claims.” While this attempt at course correction is understandable, it probably did little to allay people’s fears about how ethical lapses in AI might unjustly influence their lives.

One of AI’s biggest risks is the human bias introduced in its coding. A study published by the AI Now Institute at New York University concluded that a “diversity disaster” has resulted in flawed AI systems that perpetuate gender and racial biases. That’s because humans are at the heart of AI: one of its main principles is that humans are in the loop as part of a larger ecosystem, and as such, they must have the right skills to understand the implications of the decisions they are making. This is where diversity is crucial, to ensure we’re not training systems to propagate inbuilt biases.

We have to avoid a scenario where AI replicates and intensifies the political and sociocultural conditions and power relations within which it is embedded, and where attempts to use AI to address inequality actually exacerbate the very inequality they set out to solve.

Diversity and inclusion in our workforce are a moral imperative in their own right. Beyond that, diverse teams make better products that work for diverse sets of users, and they are better able to mitigate the risks of discrimination and bias within algorithms.

AI isn’t a story about technology: AI is a story about people. Technology plays a part, but the bigger picture is about creating the right, safe environment as a foundation so that AI can flourish and grow to scale, resulting in fairer, more progressive societal and economic outcomes. What does it take for AI to be a positive force for change? The focus must be on cultivating new and untapped sources of talent to make the technology sector more inclusive and diverse.