AI, for example, is inadvertently being trained to reflect existing biases within society, and has the ability to create new forms of racial discrimination, from increasing surveillance in lower-income areas to monitoring visa applications. Technology continues to play an integral role in our lives, and with AI and facial recognition becoming increasingly dominant, what biases can we already see in these emerging technologies? And how do we rid them of racial bias?

Racial bias in AI

While AI has the potential to drastically improve our lives, the challenges it brings may undermine its development. A report for the Council of Europe provides an in-depth analysis of the different ways in which AI can lead to discrimination. One example looks at businesses adopting an AI system to screen job applications. If a business were to use lateness as a factor to rule out applicants, people living further from the city, who tend to have longer commutes, could be discriminated against. Where place of residence correlates with ethnicity, a seemingly neutral criterion like this can end up disadvantaging people along racial lines. On the face of it, adopting AI may seem like a good way to increase the number of relevant candidates, but it could end up doing more harm than good.
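To make the lateness example concrete, here is a minimal Python sketch using a small, made-up set of applicants. The names, groups, numbers and the five-day threshold are all hypothetical and chosen only for illustration. The screening rule never sees anyone's group, yet because lateness tracks commute distance in this toy data, the two groups pass at very different rates.

```python
from dataclasses import dataclass

@dataclass
class Applicant:
    name: str
    group: str               # demographic group; never shown to the screening rule
    commute_km: float        # distance from home to the office
    late_days_per_year: int  # hypothetical punctuality record

def passes_screen(applicant: Applicant, max_late_days: int = 5) -> bool:
    """A seemingly neutral rule: reject anyone late more than max_late_days a year."""
    return applicant.late_days_per_year <= max_late_days

def pass_rate_by_group(applicants: list[Applicant]) -> dict[str, float]:
    """Share of applicants in each group who pass the screen."""
    totals: dict[str, int] = {}
    passed: dict[str, int] = {}
    for a in applicants:
        totals[a.group] = totals.get(a.group, 0) + 1
        passed[a.group] = passed.get(a.group, 0) + int(passes_screen(a))
    return {group: passed[group] / totals[group] for group in totals}

# Made-up data: in this toy example group B lives further from the office,
# and longer commutes go hand in hand with more late days.
APPLICANTS = [
    Applicant("a1", "A", 3, 2),
    Applicant("a2", "A", 5, 3),
    Applicant("a3", "A", 4, 4),
    Applicant("a4", "A", 6, 7),
    Applicant("b1", "B", 25, 8),
    Applicant("b2", "B", 30, 6),
    Applicant("b3", "B", 20, 4),
    Applicant("b4", "B", 28, 9),
]

if __name__ == "__main__":
    print(pass_rate_by_group(APPLICANTS))  # {'A': 0.75, 'B': 0.25}
```

The point of the sketch is that leaving a sensitive attribute out of the inputs is not enough on its own: the outcome still has to be compared across groups to see whether a "neutral" factor is acting as a proxy.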

AI typically uses existing data sets to “train” its capability in a given application, but bias can exist in that data itself, and it can shape the AI’s decisions once the system is deployed. The COMPAS recidivism algorithm, used in US courts to estimate a defendant’s risk of reoffending, has been shown to be biased against Black defendants: having learned from biased training data, it wrongly labelled them as high risk more often than white defendants, which can lead to harsher sentencing.

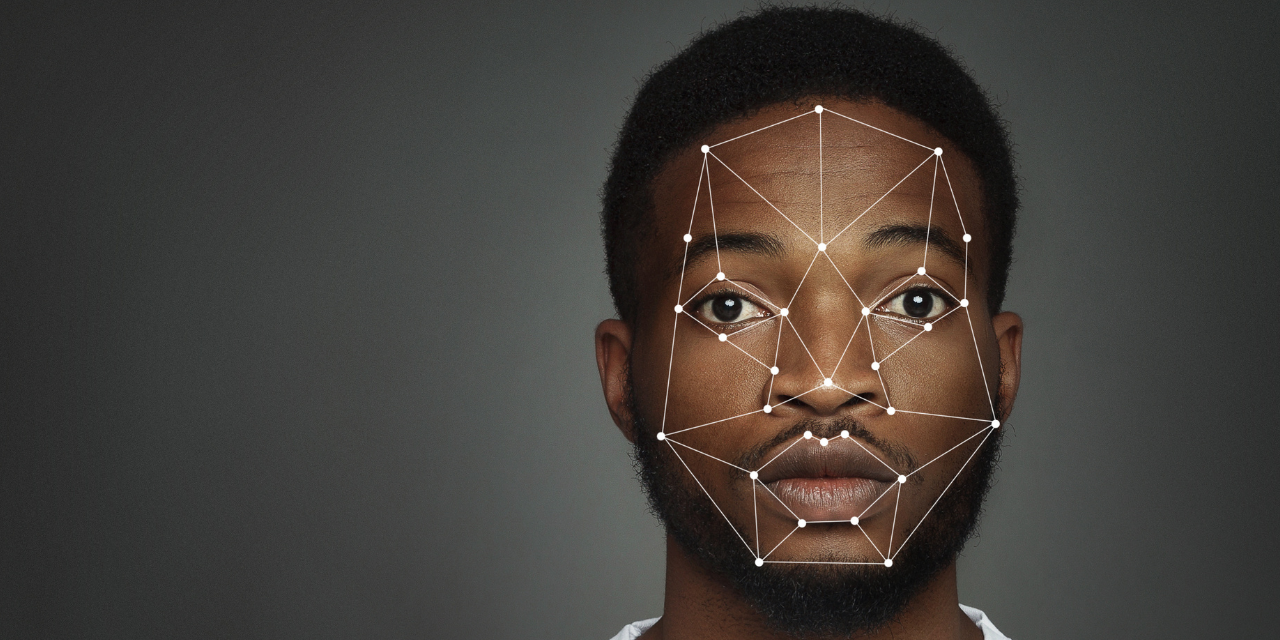

Live facial recognition (LFR) is another technology that has gained a lot of attention, particularly its adoption by law enforcement to help police officers find wanted criminals and so prevent and detect crime. Although LFR has been introduced on the grounds of public safety, it is quickly proving to be a technology that contributes to the oppression of people of colour. According to a report from the Biometrics and Forensics Ethics Group (BFEG), the under-representation of Black, Asian and other ethnic minority faces in LFR training data sets means these systems are more likely to misidentify innocent people from these groups as criminals.

While the ethical guidelines surrounding AI and facial recognition technology are broad, specific strategies need to be developed to avoid algorithmic injustice. The effectiveness of such tools needs to be assessed before deployment, and greater oversight is needed to monitor how these systems are being used. Those developing these technologies also need to take greater accountability, and we are starting to see some movement here, with tech giants such as IBM, Microsoft and Amazon announcing in 2020 that they would stop or pause selling facial recognition software to law enforcement.
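One simple form a pre-deployment check could take is comparing error rates across demographic groups, for example how often a facial recognition system wrongly flags people who are not on a watchlist. The sketch below is a hypothetical illustration, not a standard audit procedure: the group labels and counts are invented, and the tolerance `max_gap` is an assumed value rather than an established threshold.

```python
from collections import defaultdict

def false_positive_rates(records):
    """records: iterable of (group, flagged_by_system, actually_on_watchlist).

    Returns, per group, the share of people NOT on the watchlist whom the
    system nevertheless flagged (the false positive rate).
    """
    false_pos = defaultdict(int)  # innocent people wrongly flagged
    negatives = defaultdict(int)  # everyone who is not actually on the watchlist
    for group, flagged, on_watchlist in records:
        if not on_watchlist:
            negatives[group] += 1
            if flagged:
                false_pos[group] += 1
    return {group: false_pos[group] / negatives[group] for group in negatives}

def audit(records, max_gap=0.05):
    """Pass only if false positive rates differ by at most max_gap across groups.

    max_gap is an illustrative (hypothetical) tolerance, not an established standard.
    """
    rates = false_positive_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap <= max_gap

# Invented test results: each group has ten people who are not on the watchlist,
# plus two people who are.
TEST_RESULTS = (
    [("group_1", True, False)] * 1 + [("group_1", False, False)] * 9
    + [("group_1", True, True)] * 2
    + [("group_2", True, False)] * 3 + [("group_2", False, False)] * 7
    + [("group_2", True, True)] * 2
)

if __name__ == "__main__":
    rates, ok = audit(TEST_RESULTS)
    print(rates, "passes audit:", ok)
```

With these invented numbers, innocent people in `group_2` are wrongly flagged three times as often as those in `group_1` (0.3 versus 0.1), so the check fails. In practice the choice of metric and tolerance is itself an ethical decision that needs scrutiny.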

Racial identity and whiteness in tech

Not only is it important to understand racial bias, it is also important to understand racial identity within technology.

There has been some interesting research from the University of Canterbury looking at shooter bias when robots are assigned a race. The results are shocking: when a robot is racialised as Black, it is more likely to be shot at. The study also shows that participants are quicker to refrain from shooting unarmed white agents than unarmed Black agents, whether those agents are humans or robots.

Exploring the racialisation of technology is important for two reasons. Firstly, it has the potential to affect how technology such as robots functions in society; secondly, it reflects how whiteness is ingrained in society’s understanding of technological progress. In a world where robots have the potential to become teachers, carers or friends, this racial bias needs to be addressed as a matter of urgency.

Reimagining a future without racial bias: developing responsible AI practices

Firstly, we need to increase the number of Black, Asian and ethnic minority people in senior leadership roles at tech and AI companies, and ensure that executives are held responsible for developing this talent. We can’t expect to remove racial bias if the teams developing these technologies aren’t representative of society. The tech industry as a whole needs to do more if it is to truly pioneer change (check out our previous blog on diversity in tech for more detail).

Stronger regulation, or “responsibility frameworks”, also needs to be put in place. Many AI systems need greater transparency about their purpose. This also requires us to ask whether some of these machines should be designed at all. Developers should carry out a thorough risk assessment and adhere to ethical guidelines such as the EU’s Ethics guidelines for trustworthy AI when designing AI systems.

Going one step beyond regulation, we may even need multidisciplinary teams that include psychologists, sociologists, economists, philosophers and more to understand the various social, economic and ethical impacts of AI.

AI technology is only going to become more ingrained in our society, and it has the potential to drive real change. But although the advances in AI are incredibly promising, we must be more critical of AI and call out its shortcomings. Only by understanding how we can rid these systems of racial bias can we ensure that we are developing technology that changes the world for the better, for everyone.