But the biggest issue may not have been facing our own mortality. A day or so after the social media challenge went live, tech journalists started reporting on the risks. Apparently, we were exposing ourselves to more than an imagined future: millions had inadvertently opted into a surveillance state. When a user picked a photo from their library for the app to work its magic on, that photo was actually uploaded to the cloud, where FaceApp (made by a developer based in Russia) applied the aging effect using AI.

And when you dug into FaceApp’s terms and conditions, they were really, really vague. There was no clear statement of how, when, or even whether the company would use this personal data:

> You grant FaceApp a perpetual, irrevocable, nonexclusive, royalty-free, worldwide, fully-paid, transferable sub-licensable license to use, reproduce, modify, adapt, publish, translate, create derivative works from, distribute, publicly perform and display your User Content and any name, username or likeness provided in connection with your User Content in all media formats and channels now known or later developed, without compensation to you. When you post or otherwise share User Content on or through our Services, you understand that your User Content and any associated information (such as your [username], location or profile photo) will be visible to the public.

Ambiguous, right?

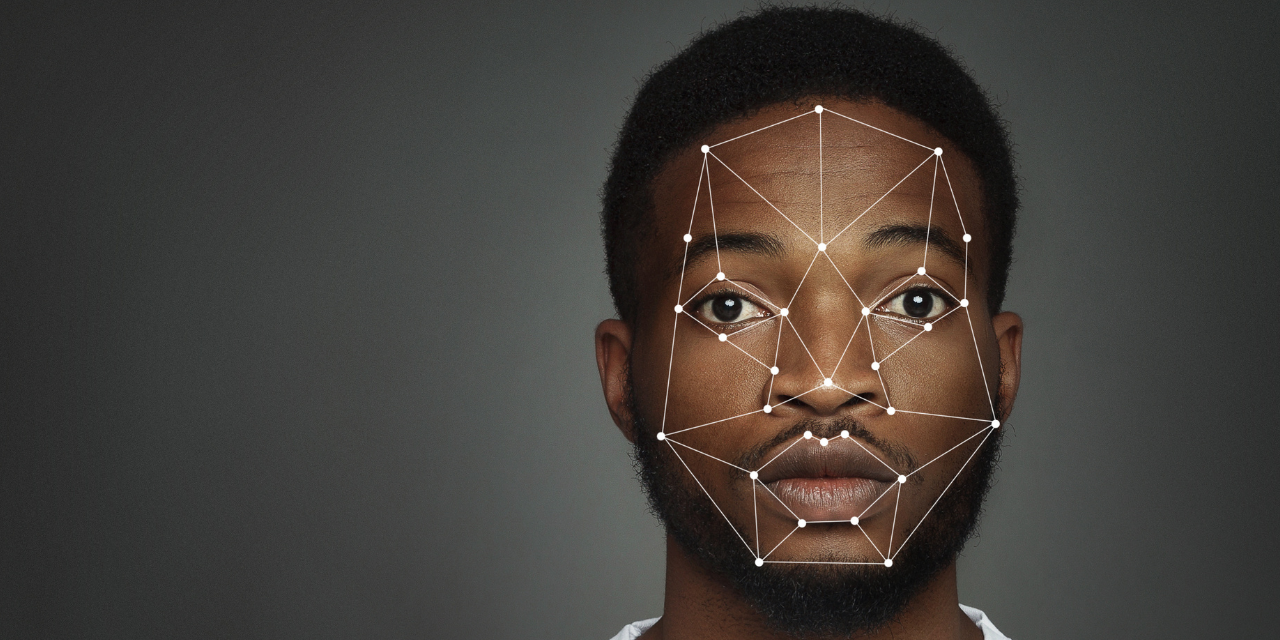

Next came the King’s Cross surveillance camera debacle, when it was revealed that the site’s private owners were using facial recognition technology without telling the public. They insisted there was nothing shady about how the data was being used, yet offered no information at all about how it was actually being used. The Mayor of London, Sadiq Khan, wrote to Robert Evans, CEO of the King’s Cross development, asking for more information. He also asked for “reassurance” that Evans had been “liaising with government ministers and the Information Commissioner’s Office to ensure its use is fully compliant with the law as it stands”.

What’s even more concerning is that these systems are vulnerable to bias: they’re more likely to misidentify women and people of colour. We tend to think of technology as neutral and free of the prejudices people hold, but the simple fact is that algorithms built by people and trained on data sets collected by people are likely to reflect those people’s unconscious biases.

Then September rolled around, and with it the Zao phenomenon. Zao, a sophisticated deepfake app developed by a unit of Momo (the company behind one of China’s most popular dating apps), takes a selfie uploaded by the user and swaps it in for the faces of celebrities in scenes from popular movies, TV shows and music videos.

The app, currently available only in China, went viral as users shared their videos through WeChat and other social media platforms. But there are huge concerns about how deepfake technology could be misused. It could easily be used to spread misinformation, to harass people, or even for emotional or financial blackmail, and those are only the most obvious examples.

These three stories hit the headlines in quick succession, and we’re only going to see more examples of facial recognition technologies growing in both sophistication and availability. The advent of 5G and the explosion of compute power mean that even more will be possible on consumer devices, including far more data collection.

This is an incredibly complex area. The public sector is not (and should not be) subject to exactly the same laws as the private sector. But what is of the utmost importance is that communications around these technologies remain ethical, and that those using them are upfront about doing so. Privacy will continue to be one of the biggest issues the tech sector faces, and organisations must be as transparent as possible to manoeuvre through a shifting landscape of regulation and consumer demands.